Best way to detect objects in images using ML Kit


08 October 2020

ML Kit is a mobile SDK that brings Google’s machine learning expertise to Android and iOS apps in a powerful yet easy-to-use package. Whether you’re new to machine learning or experienced, you can implement the features you need in just a few lines of code.

Currently ML Kit offers the ability to:

- Recognise text

- Recognise landmarks

- Detect faces

- Scan barcodes

- Label images

Let’s Start Integrating ML Kit

Part (1/3) (Setting up Firebase and the Xcode project):

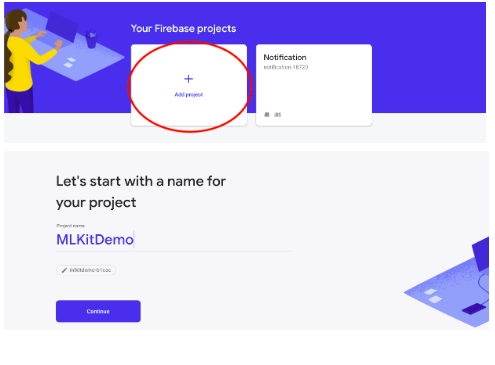

- Open the Firebase console to create a new project.

- Add a new project.

- Open Xcode and create a new project.

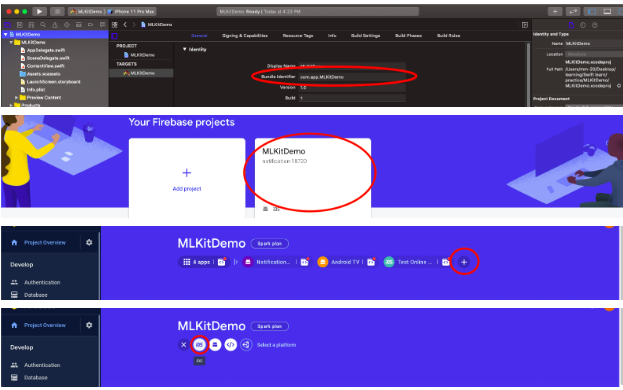

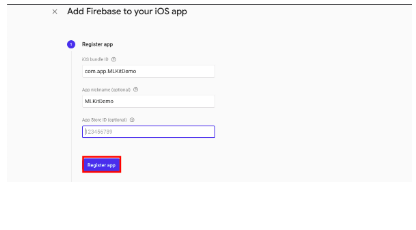

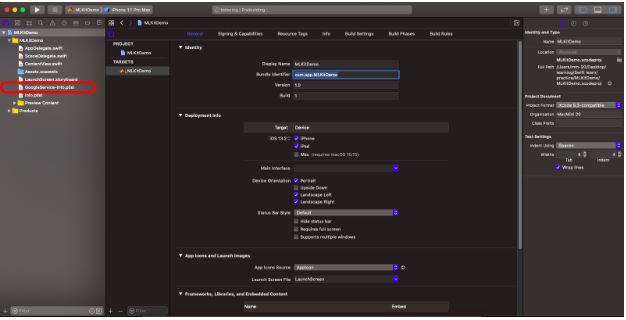

- Copy the bundle identifier and use it to register a new iOS app in the Firebase project you just created.

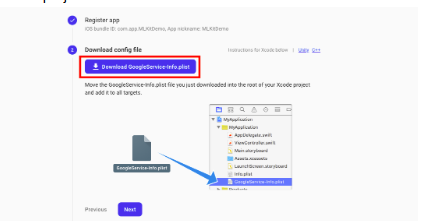

- Download the GoogleService-Info.plist file from there and add it to your Xcode project.
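Because the plist is generated for the bundle identifier you registered, a plist from the wrong app (or one not added to the app target) is an easy mistake to make. The Swift sketch below is one way to check this at runtime. The helper names configuredBundleID(fromPlist:) and firebaseConfigMatchesApp(bundle:) are hypothetical, and the sketch assumes the plist stores the identifier under the BUNDLE_ID key, as the files downloaded from the Firebase console do.

```swift
import Foundation

/// Hypothetical helper: reads the BUNDLE_ID entry from the raw contents of a
/// GoogleService-Info.plist file. Returns nil if the data is not a dictionary
/// plist or the key is missing.
func configuredBundleID(fromPlist data: Data) -> String? {
    guard let plist = try? PropertyListSerialization.propertyList(
        from: data, options: [], format: nil) as? [String: Any] else {
        return nil
    }
    return plist["BUNDLE_ID"] as? String
}

/// Hypothetical helper: true when GoogleService-Info.plist is bundled with the
/// app and was generated for this app's bundle identifier.
func firebaseConfigMatchesApp(bundle: Bundle = .main) -> Bool {
    guard let url = bundle.url(forResource: "GoogleService-Info", withExtension: "plist"),
          let data = try? Data(contentsOf: url),
          let configuredID = configuredBundleID(fromPlist: data) else {
        return false  // file missing from the target, unreadable, or malformed
    }
    return configuredID == bundle.bundleIdentifier
}
```

In a debug build you could, for example, call assert(firebaseConfigMatchesApp()) early at launch so a mismatched plist fails loudly instead of silently.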

Congrats, you have successfully set up Firebase and your Xcode project.

Now it’s time to execute the next part.

Part (2/3) (Installing pods into your Xcode project):

Note: Make sure that you already have CocoaPods installed on your Mac.

If not, follow these simple steps:

- Open Terminal and enter the following command:

sudo gem install cocoapods

If you have already installed CocoaPods, skip the step above.

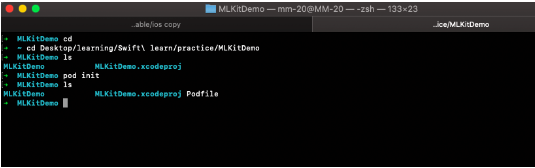

- Open Terminal and enter the following command:

cd <Path to your Xcode Project>

You are now in that directory.

- Creating a Podfile is simple. Enter the command:

pod init

- Now open the Podfile by entering this command in Terminal:

open -a Xcode Podfile

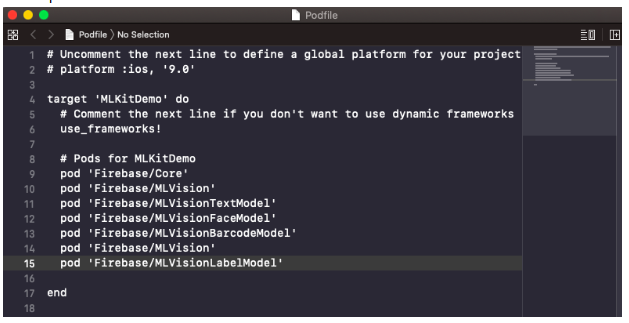

- Now copy and paste these pods into the Podfile, underneath # Pods for <Your Project name>:

```ruby
pod 'Firebase/Core'
pod 'Firebase/MLVision'
pod 'Firebase/MLVisionTextModel'
pod 'Firebase/MLVisionFaceModel'
pod 'Firebase/MLVisionBarcodeModel'
pod 'Firebase/MLVisionLabelModel'
```

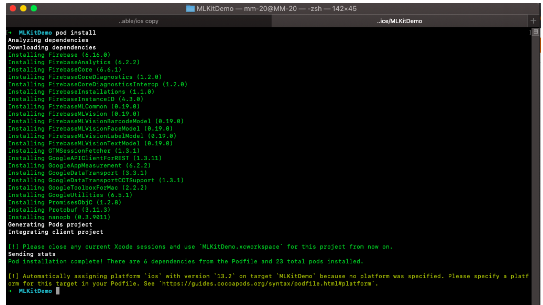

- Now, there’s only one thing remaining. Head back to Terminal and type:

pod install

This is going to take a few minutes, so feel free to take a break while CocoaPods installs the packages we are going to use. When everything is finished, you will notice a new file with the .xcworkspace extension; from now on, open your project through this file.

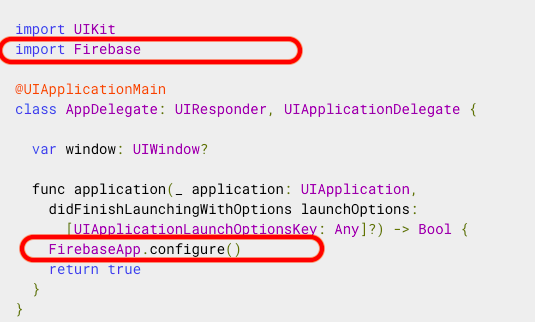

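Open AppDelegate.swift.

Once we’re in AppDelegate.swift, add two lines of code to configure Firebase: import Firebase at the top of the file, and FirebaseApp.configure() inside application(_:didFinishLaunchingWithOptions:).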
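A minimal sketch of an AppDelegate showing where those two lines go, assuming the UIKit app-delegate lifecycle from Xcode's storyboard template (a project that also has a SceneDelegate keeps its scene code unchanged):

```swift
import UIKit
import Firebase  // first line: makes FirebaseApp available

@UIApplicationMain
class AppDelegate: UIResponder, UIApplicationDelegate {

    var window: UIWindow?

    func application(_ application: UIApplication,
                     didFinishLaunchingWithOptions launchOptions: [UIApplication.LaunchOptionsKey: Any]?) -> Bool {
        // Second line: reads GoogleService-Info.plist and sets up the default
        // Firebase app. Call it once, before using any Firebase service such as
        // Vision.vision().
        FirebaseApp.configure()
        return true
    }
}
```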

Part (3/3) (Understanding ML Kit and how to use it):

Before starting, the first thing you need is an image. There are various ways to get one:

- Capture from camera.

- Pick from the gallery.

- Or add an image to your Xcode project and use that.

Use whichever way is easiest for you.
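For the camera and gallery options, one common approach is UIKit's UIImagePickerController. The sketch below is an assumption about how you might wire it up; the class name ImagePickingViewController and the onImagePicked callback are hypothetical. Using the camera also requires an NSCameraUsageDescription entry in your Info.plist.

```swift
import UIKit

class ImagePickingViewController: UIViewController,
    UIImagePickerControllerDelegate, UINavigationControllerDelegate {

    // Hypothetical hook: receives the picked image, e.g. to run barcode detection on it.
    var onImagePicked: ((UIImage) -> Void)?

    func presentPicker(preferCamera: Bool) {
        let picker = UIImagePickerController()
        // Fall back to the photo library when no camera is available (e.g. the Simulator).
        if preferCamera && UIImagePickerController.isSourceTypeAvailable(.camera) {
            picker.sourceType = .camera
        } else {
            picker.sourceType = .photoLibrary
        }
        picker.delegate = self
        present(picker, animated: true)
    }

    func imagePickerController(_ picker: UIImagePickerController,
                               didFinishPickingMediaWithInfo info: [UIImagePickerController.InfoKey: Any]) {
        picker.dismiss(animated: true)
        // The unedited image the user captured or selected.
        if let image = info[.originalImage] as? UIImage {
            onImagePicked?(image)
        }
    }

    func imagePickerControllerDidCancel(_ picker: UIImagePickerController) {
        picker.dismiss(animated: true)
    }
}
```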

Barcode Scanning:

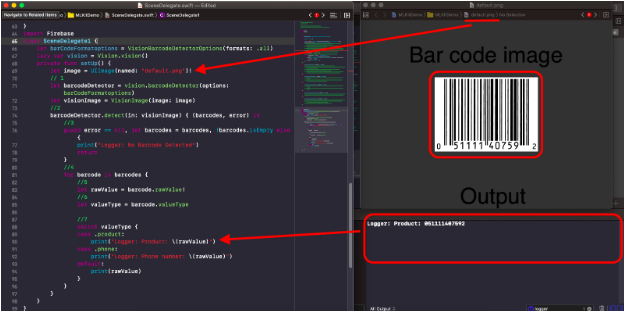

First things first, you need to import Firebase in your view controller. Next, we need to define some variables that our barcode scanning function will use.

The variable barCodeFormatoptions tells the barcode detector which barcode formats to look for, and the vision variable holds an instance of the Firebase Vision service.

Add the following code to your view controller:

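```swift
import UIKit
import Firebase

class ViewController: UIViewController {

    // Ask the detector to look for every supported barcode format.
    let barCodeFormatoptions = VisionBarcodeDetectorOptions(formats: .all)

    // Entry point to the Firebase ML Kit Vision APIs.
    lazy var vision = Vision.vision()

    override func viewDidLoad() {
        super.viewDidLoad()
        setUp()
    }

    private func setUp() {
        guard let image = UIImage(named: "default.png") else {
            print("Logger: Image not found")
            return
        }

        // Create a barcode detector and wrap the UIImage in a VisionImage.
        let barcodeDetector = vision.barcodeDetector(options: barCodeFormatoptions)
        let visionImage = VisionImage(image: image)

        // Run detection; the completion handler receives the barcodes or an error.
        barcodeDetector.detect(in: visionImage) { (barcodes, error) in
            // Stop on an error or when nothing was found.
            guard error == nil, let barcodes = barcodes, !barcodes.isEmpty else {
                print("Logger: No Barcode Detected")
                return
            }

            // Handle each detected barcode in turn.
            for barcode in barcodes {
                // The raw value holds the data encoded in the barcode.
                guard let rawValue = barcode.rawValue else { continue }

                // The value type says what kind of data it is.
                let valueType = barcode.valueType

                // Print the value with a prefix that depends on its type.
                switch valueType {
                case .product:
                    print("Logger: Product: \(rawValue)")
                case .phone:
                    print("Logger: Phone number: \(rawValue)")
                default:
                    print(rawValue)
                }
            }
        }
    }
}
```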

- First, we create barcodeDetector, a barcode-detecting object from the Firebase Vision service. Because it uses barCodeFormatoptions, it looks for all barcode formats.

- Then we create visionImage, a VisionImage that wraps the image we picked so the detector can process it.

- Next, we call the detection method, whose completion handler receives two objects: barcodes and error. First, we handle the error: if there is an error or no barcodes were recognised, we print “No Barcode Detected” to the console.

- Otherwise, we use a for loop to run the same code on each recognised barcode. We define two constants: rawValue and valueType.

- The raw value of a barcode is the data it holds, as a string. This can be some text, a number, a URL, etc.

- The value type of a barcode states what kind of information it is: an email, a contact, a link, etc.

- Finally, we check the value type and print the raw value to the console with a matching message, for example “Product:” followed by the value for a product code, or “Phone number:” followed by the number. In your app you could set the text of a result view the same way; for a URL, for example, it could say “URL: ” followed by the URL.
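To show the type-to-message idea from the last step without needing the Firebase SDK, here is a small Swift sketch. ScannedValueType is a hypothetical, simplified stand-in for the detector's value types; in the real loop you would map cases such as .product and .phone onto it (or switch on barcode.valueType directly).

```swift
/// Hypothetical, simplified stand-in for the detector's barcode value types.
enum ScannedValueType {
    case url, phone, product, email, other
}

/// Builds the text a result view could show for one scanned barcode.
func resultText(for type: ScannedValueType, rawValue: String) -> String {
    switch type {
    case .url:     return "URL: \(rawValue)"
    case .phone:   return "Phone number: \(rawValue)"
    case .product: return "Product: \(rawValue)"
    case .email:   return "Email: \(rawValue)"
    case .other:   return rawValue  // unknown types are shown as-is
    }
}
```

Inside the detection loop this could be used as resultView.text = resultText(for: .url, rawValue: rawValue), where resultView is a hypothetical label in your view controller.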

Now it’s time to run the app and check the output in the console. With this powerful tool you can do much more, such as recognising text, recognising landmarks, detecting faces and labelling images.

Try implementing them, and if you get stuck anywhere, Firebase provides very good documentation.
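As a starting point for text recognition, the same pattern as the barcode example applies. This sketch assumes the Firebase MLVision API used above, with the 'Firebase/MLVisionTextModel' pod installed and FirebaseApp.configure() already called; the class TextRecognitionExample and its completion-based method are hypothetical names for illustration.

```swift
import UIKit
import Firebase

final class TextRecognitionExample {
    private lazy var vision = Vision.vision()

    /// Recognises text in a UIImage with the on-device recognizer and passes
    /// the full recognised text (or nil on failure) to the completion handler.
    func recognizeText(in image: UIImage, completion: @escaping (String?) -> Void) {
        let textRecognizer = vision.onDeviceTextRecognizer()
        let visionImage = VisionImage(image: image)

        textRecognizer.process(visionImage) { result, error in
            guard error == nil, let result = result else {
                print("Logger: No Text Detected")
                completion(nil)
                return
            }
            // result.text holds all recognised text; result.blocks splits it into blocks.
            for block in result.blocks {
                print("Logger: Block: \(block.text)")
            }
            completion(result.text)
        }
    }
}
```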