Building a Microservices Ecosystem with Kafka Streams and KSQL

07 April 2021

Kafka Streams is a library for building streaming applications that transform input Kafka topics into output Kafka topics (or into calls to external services or updates to databases).

KSQL is a SQL engine for Kafka. It lets you write SQL queries that analyze a stream of data in real time.

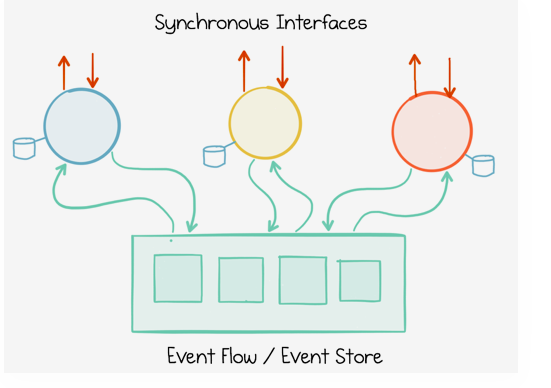

Nowadays, we invariably operate in ecosystems: groups of applications and services which work together towards some higher-level goal. When we make these systems event-driven, they come with a number of advantages. The first is the idea that we can rethink our services not simply as a mesh of remote requests and responses but as a flow of events, decoupling each event source from its consequences.

The second comes from the realization that these events are themselves facts: a narrative that describes the evolution of the business over time. That narrative is also a dataset in its own right: orders, payments, customers, or whatever it may be. We can store these facts in the very infrastructure we use to broadcast them, linking applications and services together.

These insights can do much to improve speed and agility, but the real benefits of streaming platforms come when we embrace not only the messaging backbone but the stream processing API itself. Such streaming services do not stop. They continuously reshape and redirect the data as it flows: branching substreams, recasting tables, rekeying to redistribute, and joining streams back together. So this is a model that embraces parallelism by following the natural flow of the system.

Your System Has State: So Let’s Deal with It

Making services stateless is widely considered to be a good idea. They can be scaled out, cookie-cutter-style, freed from the burdensome weight of loading data on startup. Web servers are a good example: to increase the capacity for generating dynamic content, you simply add more servers. But most applications need state of some form, and that state has to live somewhere, so the system ends up bottlenecking on the data layer (often a database) sitting at the other end of a network connection.

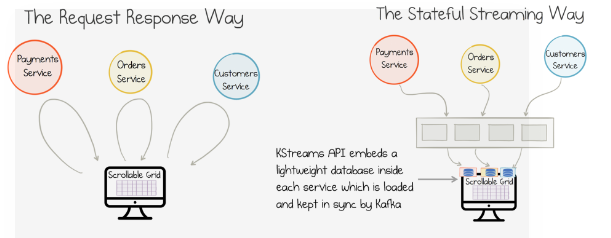

To illustrate, imagine a user interface that lets users browse Order, Payment, and Customer information in a scrollable grid. As the user scrolls through the items displayed, the response time for each row needs to be snappy.

In a traditional, stateless model, each row on the screen would require a call to all three services. This would be sluggish in practice, so caching would be added, along with some hand-crafted polling mechanism to keep the cache up to date.

Using an event-streaming approach, we materialize the data locally via the Kafka Streams API.

Kafka includes a range of features that make the storage, movement, and retention of state practical. So stream processors are proudly stateful. They let data be physically materialized wherever it is needed, throughout the ecosystem. This increases performance. It also increases autonomy. No remote calls are needed!
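To make the idea of local materialization concrete, here is a minimal sketch in plain Java. It is not the Kafka Streams API: the class `LocalOrderView` and its methods are hypothetical names, and the in-memory maps merely stand in for the local tables a stream processor would build from the Order, Payment, and Customer topics.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

/** Hypothetical stand-in for locally materialized tables; not the Kafka Streams API. */
final class LocalOrderView {
    // One in-memory table per upstream event stream.
    private final Map<String, String> orderToCustomer = new HashMap<>(); // orderId -> customerId
    private final Map<String, String> customerNames = new HashMap<>();   // customerId -> name
    private final Map<String, String> paymentStatus = new HashMap<>();   // orderId -> status

    /** Apply an event from the (assumed) Orders stream. */
    void onOrder(String orderId, String customerId) {
        orderToCustomer.put(orderId, customerId);
    }

    /** Apply an event from the (assumed) Customers stream. */
    void onCustomer(String customerId, String name) {
        customerNames.put(customerId, name);
    }

    /** Apply an event from the (assumed) Payments stream. */
    void onPayment(String orderId, String status) {
        paymentStatus.put(orderId, status);
    }

    /** Build one grid row from local state only; no remote call is made. */
    Optional<String> row(String orderId) {
        String customerId = orderToCustomer.get(orderId);
        if (customerId == null) {
            return Optional.empty(); // the order has not been seen yet
        }
        String name = customerNames.getOrDefault(customerId, "UNKNOWN");
        String status = paymentStatus.getOrDefault(orderId, "UNPAID");
        return Optional.of(orderId + " | " + name + " | " + status);
    }
}
```

Each incoming event updates the local tables, so rendering a row becomes a handful of map lookups rather than three network round trips. A real service would hold this state in Kafka Streams state stores, which are backed by Kafka so they can be rebuilt after a restart.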

Scalable, Consistent, Stateful Operations in the Inventory Service

In the design diagram, there is an Inventory Service. When a user makes a purchase (say, an iPad), the Inventory Service makes sure that there are enough iPads in stock for the order to be fulfilled. To do this, several things need to happen as a single atomic unit. The service checks how many iPads there are in the warehouse. Next, one of the iPads must be reserved until such time as the user completes the payment, the iPad ships, etc. This requires the following four actions to be performed inside each service instance:

- Read an Order message

- Validate whether there is enough stock available for that Order

- Update the State Store of “reserved items” to reserve the iPad so no one else can take it.

- Send out a message that validates the Order.
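The four steps above can be sketched as a small piece of plain Java. This is an illustration of the logic only, under stated assumptions: `InventoryValidator`, `Result`, and the two maps are hypothetical names, the maps stand in for state stores, and nothing here provides the atomicity that the real service gets from Kafka.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical model of the reservation logic; the maps stand in for state stores. */
final class InventoryValidator {
    enum Result { PASS, FAIL }

    private final Map<String, Integer> warehouseStock = new HashMap<>(); // productId -> units held
    private final Map<String, Integer> reservedStock = new HashMap<>();  // productId -> units reserved

    /** Seed the warehouse count for a product. */
    void setStock(String productId, int quantity) {
        warehouseStock.put(productId, quantity);
    }

    /** Steps 1 to 4: read the order, check stock, reserve, and return the validation result. */
    Result onOrder(String productId, int quantity) {
        int inWarehouse = warehouseStock.getOrDefault(productId, 0);
        int reserved = reservedStock.getOrDefault(productId, 0);

        // Step 2: is there enough unreserved stock for this order?
        if (quantity <= 0 || inWarehouse - reserved < quantity) {
            return Result.FAIL;
        }

        // Step 3: reserve the items so no other order can take them.
        reservedStock.merge(productId, quantity, Integer::sum);

        // Step 4: the caller would publish this result as the validation message.
        return Result.PASS;
    }

    /** Units currently reserved for a product. */
    int reserved(String productId) {
        return reservedStock.getOrDefault(productId, 0);
    }
}
```

With two iPads in stock, the first two single-unit orders pass and the third fails, because both units are already reserved. In this sketch the check and the reservation are only safe when a single thread handles a given product.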

What is neat about this approach is its ability to scale the atomic operations out across many threads or machines. There is no remote locking and there are no remote reads. This works reliably because two things are ensured:

- Kafka’s transactions feature is enabled.

- Data is partitioned by ProductId before the reservation operation is performed.
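The first of these two requirements is typically a configuration setting rather than code. The sketch below builds the settings with plain `java.util.Properties`. The class name, the application id `inventory-service`, and the broker address passed in are assumptions for illustration. The value `exactly_once_v2` applies to Kafka Streams 3.0 and later; older releases use `exactly_once`.

```java
import java.util.Properties;

/** Hypothetical helper that assembles Kafka Streams settings for the Inventory Service. */
final class InventoryServiceConfig {
    static Properties build(String bootstrapServers) {
        Properties props = new Properties();
        // Assumed application name; it identifies this service's instances as one group.
        props.put("application.id", "inventory-service");
        props.put("bootstrap.servers", bootstrapServers);
        // Asks Kafka Streams to process with exactly-once semantics, which relies on
        // Kafka transactions. Use "exactly_once" on releases before 3.0.
        props.put("processing.guarantee", "exactly_once_v2");
        return props;
    }
}
```

These properties would then be handed to the Kafka Streams application when it is constructed.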

The first point should be obvious: Kafka’s transactions ensure atomicity. Partitioning by ProductId is a little more subtle. Partitioning ensures that all orders for iPads are sent to a single thread in one of the service instances, guaranteeing in-order execution. Orders for other products will be sent elsewhere.
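The effect of keying by ProductId can be shown with a small routing sketch. It is a simplified model, not Kafka's partitioner: Kafka hashes the serialized key bytes, whereas this example uses `String.hashCode()` as a stand-in, and `ProductPartitioner` and its methods are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical model of key-based routing; Kafka's own hash function differs. */
final class ProductPartitioner {
    /** Same productId and partition count always give the same partition. */
    static int partitionFor(String productId, int numPartitions) {
        // floorMod keeps the result in [0, numPartitions) even for negative hash codes.
        return Math.floorMod(productId.hashCode(), numPartitions);
    }

    /**
     * Groups order ids by partition, preserving arrival order within each partition.
     * Each order is a two-element array: {orderId, productId}.
     */
    static Map<Integer, List<String>> route(List<String[]> orders, int numPartitions) {
        Map<Integer, List<String>> byPartition = new TreeMap<>();
        for (String[] order : orders) {
            int partition = partitionFor(order[1], numPartitions);
            byPartition.computeIfAbsent(partition, k -> new ArrayList<>()).add(order[0]);
        }
        return byPartition;
    }
}
```

Because every iPad order lands in the same partition, and one thread owns that partition, the stock check and the reservation for iPads never race with each other. In Kafka Streams, rekeying of this kind is commonly expressed with `selectKey`, which causes the stream to be repartitioned before the stateful step.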

Summing Up

When building services using a streaming platform, some will be stateless: simple functions that take an input, perform a business operation, and produce an output. Some will be stateful but read-only, as when views need to be created so that they can serve remote queries. Others need to both read and write state, either inside the Kafka ecosystem or by calling out to other services or databases. Having all of these approaches available makes the Kafka Streams API a powerful tool for building event-driven services.