Automatically Scan Cloud Storage Buckets For Sensitive Data

Google Cloud Storage is an object storage service designed for storing large, unstructured data sets and accessing them on Google’s infrastructure.

Most organizations use public cloud storage platforms for a range of use cases, from serving data to data analytics to data archiving. Confidential or sensitive information can easily end up stored there, so security in the cloud is essential to keep such data from being disclosed.

Secure Storage Buckets

To keep the data in a bucket secure, the first step is to make sure that only the right individuals or groups have access. The recommended way to control access to buckets and objects is Identity and Access Management (IAM) permissions. Even when the permissions are set correctly, it is still important to know whether a Cloud Storage bucket holds any sensitive data.
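As a rough illustration of IAM-based access control, the sketch below adds a member to a role on a bucket's IAM policy. It assumes the `google-cloud-storage` Python client. The bucket name and the partner account are hypothetical placeholders, not values from this article. The helper takes a bucket object as an argument so it can be read and tried without credentials.

```python
def grant_bucket_role(bucket, role, member):
    """Add `member` to `role` on a bucket's IAM policy.

    `bucket` is expected to behave like google.cloud.storage.Bucket,
    i.e. to provide get_iam_policy() and set_iam_policy().
    """
    # Version 3 policies expose their bindings as a list of dicts.
    policy = bucket.get_iam_policy(requested_policy_version=3)
    policy.bindings.append({"role": role, "members": {member}})
    bucket.set_iam_policy(policy)
    return policy


def example_usage():
    # Imported lazily so the helper above does not require the package
    # or credentials just to be loaded.
    from google.cloud import storage

    client = storage.Client()
    bucket = client.bucket("example-share-bucket")  # hypothetical bucket name
    grant_bucket_role(
        bucket,
        "roles/storage.objectViewer",  # read-only access to objects
        "user:partner@example.com",    # hypothetical partner account
    )
```

Granting a narrow role such as object viewer, rather than a broad one, keeps the partner limited to reading what is in the bucket.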

Suppose that, as an employee, you need to periodically share reports with a partner outside your organization, and these reports must not contain sensitive elements such as Personally Identifiable Information (PII). You would typically create a storage bucket, upload the data to it, and grant your partner access. However, someone may upload the wrong file, one that contains confidential information, or may not know that PII is not supposed to be uploaded.

Cloud Data Loss Prevention (DLP) helps you understand and manage such sensitive data. It provides fast, scalable classification and redaction of sensitive data elements. Using the Data Loss Prevention API and Cloud Functions, you can automatically scan this data before it reaches the shared storage bucket.

Building buckets and classification pipeline

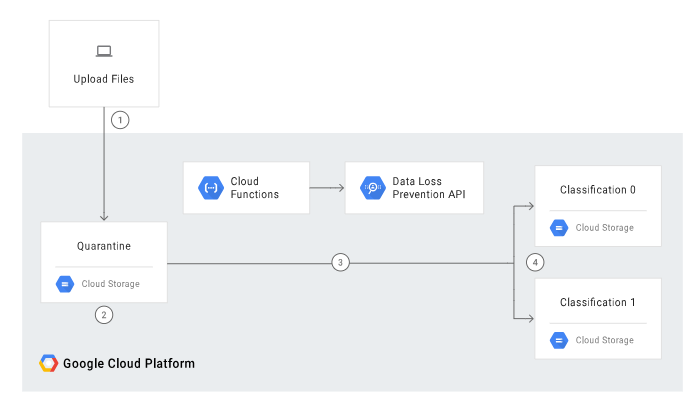

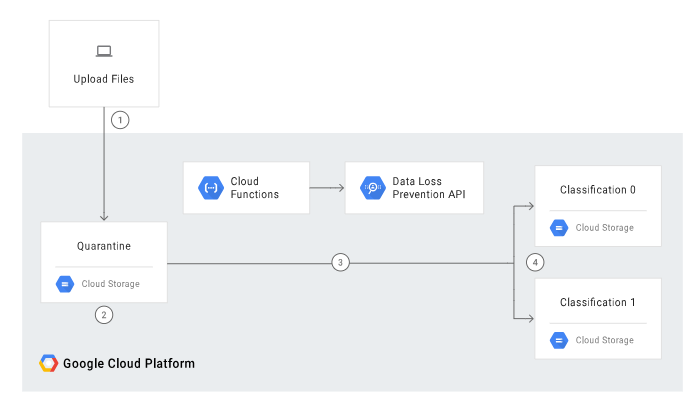

Let’s see how to scan files uploaded to a storage bucket and sort them into separate buckets according to their classification.

- Files are uploaded to the Cloud Storage upload bucket.

- The upload invokes a Cloud Function.

- The Data Loss Prevention API detects and classifies the data.

- The data is moved to the appropriate bucket.
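The last step above comes down to a simple routing decision. The sketch below shows one way to express it. The function name and the shape of `finding_counts` are assumptions made for illustration, not part of any Google API.

```python
def choose_destination(finding_counts, share_bucket, restrict_bucket):
    """Pick the target bucket for a scanned file.

    `finding_counts` maps an infoType name (for example "EMAIL_ADDRESS")
    to the number of matches reported for the file.
    """
    has_sensitive_data = any(count > 0 for count in finding_counts.values())
    return restrict_bucket if has_sensitive_data else share_bucket
```

A file with no findings goes to the share bucket, and any file with at least one finding goes to the restricted bucket.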

The overall setup is shown in the diagram above.

Create Cloud Storage buckets

- Create three buckets: one to upload data to (the “Upload” bucket, Quarantine), one to share from (the “Share” bucket, Classification 0), and one for any data that gets flagged as sensitive (the “Restrict” bucket, Classification 1).

- Configure access so that the relevant users can put data in the “Upload” bucket.

- The “Share” bucket holds the data that can be shared with the partner outside your organization.

- Data that is detected as sensitive is moved to the “Restrict” bucket.
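The three buckets could be created from Python roughly as follows. This is a sketch that assumes the `google-cloud-storage` client, whose `Client.create_bucket` accepts a bucket name and a `location`. The naming scheme built from a `prefix` is a hypothetical convention chosen here because bucket names must be globally unique.

```python
def bucket_plan(prefix):
    """Return the three bucket names used by the pipeline, keyed by role."""
    return {
        "upload": f"{prefix}-quarantine",          # incoming, unscanned files
        "share": f"{prefix}-classification-0",     # no sensitive data found
        "restrict": f"{prefix}-classification-1",  # sensitive data found
    }


def create_buckets(client, prefix, location="US"):
    """Create the three buckets with a storage.Client-like `client`."""
    created = {}
    for role, name in bucket_plan(prefix).items():
        created[role] = client.create_bucket(name, location=location)
    return created
```

With the real client this would be called as `create_buckets(storage.Client(), "my-project-id")`, where the prefix is a placeholder.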

Create a Cloud Pub/Sub topic and subscription

- The topic and subscription notify you when the processing of a file uploaded to the bucket is complete.
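A minimal sketch of creating the topic and subscription, assuming the `google-cloud-pubsub` client (`pubsub_v1.PublisherClient` and `pubsub_v1.SubscriberClient`). The project, topic, and subscription IDs are placeholders. The clients are passed in as arguments so that the resource-name logic can be checked in isolation.

```python
def topic_path(project_id, topic_id):
    """Fully qualified Pub/Sub topic name."""
    return f"projects/{project_id}/topics/{topic_id}"


def subscription_path(project_id, subscription_id):
    """Fully qualified Pub/Sub subscription name."""
    return f"projects/{project_id}/subscriptions/{subscription_id}"


def create_topic_and_subscription(publisher, subscriber, project_id,
                                  topic_id, subscription_id):
    """Create a topic, then a subscription attached to it."""
    topic = topic_path(project_id, topic_id)
    subscription = subscription_path(project_id, subscription_id)
    publisher.create_topic(request={"name": topic})
    subscriber.create_subscription(
        request={"name": subscription, "topic": topic}
    )
    return topic, subscription
```

The topic has to exist before the subscription is created, which is why the calls are made in that order.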

Create the Cloud Functions

- Create a Cloud Function that is invoked when an object is uploaded to Cloud Storage.

- Create another Cloud Function that invokes the DLP API when a message is received in the Cloud Pub/Sub queue.
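The first function could look roughly like the sketch below. It assumes the `google-cloud-dlp` client (`dlp_v2.DlpServiceClient`) and a background Cloud Function triggered by a Cloud Storage upload, whose event carries the `bucket` and `name` of the new object. The environment variable names and the list of infoTypes are illustrative assumptions.

```python
import os


def build_inspect_job(bucket_name, file_name, topic, info_types):
    """Build the inspect-job part of a DLP create_dlp_job request."""
    return {
        # What to look for.
        "inspect_config": {
            "info_types": [{"name": name} for name in info_types],
        },
        # Where to look: the single object that was just uploaded.
        "storage_config": {
            "cloud_storage_options": {
                "file_set": {"url": f"gs://{bucket_name}/{file_name}"}
            }
        },
        # Publish a Pub/Sub message when the job finishes.
        "actions": [{"pub_sub": {"topic": topic}}],
    }


def start_dlp_scan(dlp_client, project_id, bucket_name, file_name,
                   topic, info_types):
    """Submit the inspection job and return its resource name."""
    job = dlp_client.create_dlp_job(
        request={
            "parent": f"projects/{project_id}",
            "inspect_job": build_inspect_job(
                bucket_name, file_name, topic, info_types
            ),
        }
    )
    return job.name


def on_upload(event, context):
    """Entry point for the Cloud Storage trigger (assumed wiring)."""
    from google.cloud import dlp_v2  # lazy import, needs google-cloud-dlp

    return start_dlp_scan(
        dlp_v2.DlpServiceClient(),
        os.environ["PROJECT_ID"],   # hypothetical environment variable
        event["bucket"],
        event["name"],
        os.environ["DLP_TOPIC"],    # hypothetical, full topic path
        ["EMAIL_ADDRESS", "PHONE_NUMBER", "CREDIT_CARD_NUMBER"],
    )
```

Keeping the request builder separate from the client call makes it easy to adjust the infoTypes or add further inspection options later.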

Upload files to the source bucket

- Once files are uploaded to the source bucket, the Cloud Function is invoked.

- The function uses the Data Loss Prevention API to detect and classify the files, which are then moved to the appropriate buckets.
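Once a DLP job has finished, the file can be moved based on the job's results. The sketch below assumes the `google-cloud-dlp` and `google-cloud-storage` clients. It reads the scanned file's URL and the per-infoType statistics from the job returned by `get_dlp_job`, then copies the object to its destination and deletes the original. The function names are illustrative.

```python
def split_gcs_url(url):
    """Split 'gs://bucket/path/to/file' into ('bucket', 'path/to/file')."""
    prefix = "gs://"
    if not url.startswith(prefix):
        raise ValueError(f"not a Cloud Storage URL: {url!r}")
    bucket_name, _, object_name = url[len(prefix):].partition("/")
    return bucket_name, object_name


def total_findings(info_type_stats):
    """Sum the match counts across all reported infoTypes."""
    return sum(stat.count for stat in info_type_stats)


def move_object(storage_client, source_name, object_name, destination_name):
    """Copy an object to another bucket, then delete the original."""
    source = storage_client.bucket(source_name)
    destination = storage_client.bucket(destination_name)
    blob = source.blob(object_name)
    source.copy_blob(blob, destination, object_name)
    blob.delete()


def resolve_dlp_job(dlp_client, storage_client, job_name,
                    share_bucket, restrict_bucket):
    """Move the scanned file according to the finished job's findings."""
    job = dlp_client.get_dlp_job(request={"name": job_name})
    details = job.inspect_details
    url = (details.requested_options.job_config.storage_config
           .cloud_storage_options.file_set.url)
    source_name, object_name = split_gcs_url(url)
    findings = total_findings(details.result.info_type_stats)
    destination = restrict_bucket if findings > 0 else share_bucket
    move_object(storage_client, source_name, object_name, destination)
    return destination
```

In a Pub/Sub-triggered function, the job name is expected to arrive with the notification message (to my knowledge in a `DlpJobName` attribute) and would be passed in as `job_name`. It is worth confirming that attribute name against the DLP documentation before relying on it.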