Working with Kafka

16 July 2021

Kafka enables communication between producers and consumers through message-based topics. Apache Kafka is a fast, scalable, fault-tolerant, publish-subscribe messaging system. Applications exchange messages, which Kafka stores and transmits as byte arrays, so a message can carry a string or any other serialized data. In short, it provides a platform for high-end, new-generation distributed applications. One of Kafka's best features is that it is highly available, resilient to node failures, and supports automatic recovery. This makes Apache Kafka well suited to communication and integration between the components of large-scale, real-world data systems.

Components of Kafka

- Kafka producer

- Kafka consumer
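To make the producer/consumer split concrete, here is a toy, in-memory model of a single-partition topic. It is not the Kafka API. ToyTopic, ToyConsumer and PubSubDemo are hypothetical names, and real Kafka adds partitions, replication and durable storage. What the sketch does show is the core publish-subscribe idea: producers append byte-array messages to a log, and each consumer tracks its own offset, so every subscriber sees every message independently.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Toy, in-memory model of a single-partition topic (NOT the Kafka API).
// Records are byte arrays in an append-only log; reading never removes them.
class ToyTopic {
    private final List<byte[]> log = new ArrayList<>();

    // Producer side: append a record and return its offset in the log.
    synchronized long publish(byte[] value) {
        log.add(value);
        return log.size() - 1;
    }

    // Consumer side: return a copy of all records from the given offset onward.
    synchronized List<byte[]> readFrom(long offset) {
        return new ArrayList<>(log.subList((int) offset, log.size()));
    }
}

// Each consumer keeps its own position, independent of other consumers.
class ToyConsumer {
    private final ToyTopic topic;
    private long offset = 0; // offset of the next record to read

    ToyConsumer(ToyTopic topic) {
        this.topic = topic;
    }

    // Fetch new records, decode them as UTF-8, and advance past them.
    List<String> poll() {
        List<byte[]> batch = topic.readFrom(offset);
        offset += batch.size();
        List<String> out = new ArrayList<>();
        for (byte[] b : batch) {
            out.add(new String(b, StandardCharsets.UTF_8));
        }
        return out;
    }
}

class PubSubDemo {
    public static void main(String[] args) {
        ToyTopic topic = new ToyTopic();
        ToyConsumer a = new ToyConsumer(topic);
        ToyConsumer b = new ToyConsumer(topic);
        topic.publish("order-created".getBytes(StandardCharsets.UTF_8));
        System.out.println(a.poll()); // [order-created]
        topic.publish("order-paid".getBytes(StandardCharsets.UTF_8));
        System.out.println(a.poll()); // [order-paid]
        System.out.println(b.poll()); // [order-created, order-paid]
    }
}
```

Consumer `b` starts at offset 0, so it still receives both messages even though `a` read them first. As in Kafka, consuming a message does not delete it from the log.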

Kafka Producer:

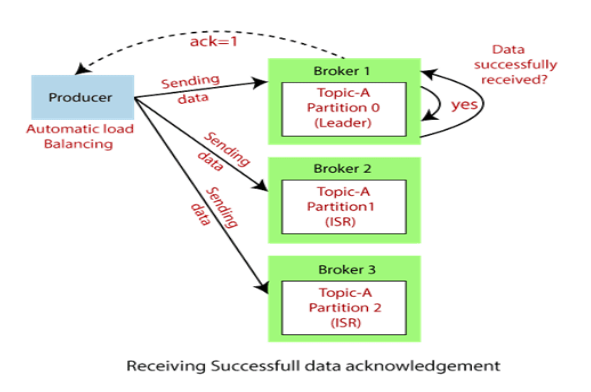

Kafka producers are the source of an application's data stream. When we need to generate tokens or messages and publish them to one or more topics in the Kafka cluster, we use a Kafka producer. The Producer API packages each message or token and delivers it to the Kafka brokers.

Kafka producers are responsible for writing records to topics. Typically, this means writing a program using the KafkaProducer API.
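With the standard Apache Kafka Java client, such a program usually starts from a `Properties` object. The sketch below assumes that client. The keys `bootstrap.servers`, `key.serializer`, `value.serializer` and `acks` are real producer settings, while `ProducerSettings`, `localhost:9092` and `my-topic` are hypothetical placeholders. The keys are written as plain strings so the sketch compiles without `kafka-clients`; with the library on the classpath, the `ProducerConfig` constants are the usual choice.

```java
import java.util.Properties;

// Builds a configuration for org.apache.kafka.clients.producer.KafkaProducer.
// ProducerSettings is a hypothetical helper; only java.util is needed here.
class ProducerSettings {
    static Properties build(String bootstrapServers) {
        Properties props = new Properties();
        // Initial broker list used to discover the rest of the cluster.
        props.put("bootstrap.servers", bootstrapServers);
        // Serializers convert keys and values to bytes before sending.
        props.put("key.serializer",
                "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer",
                "org.apache.kafka.common.serialization.StringSerializer");
        // Wait for all in-sync replicas to acknowledge each write.
        props.put("acks", "all");
        return props;
    }

    public static void main(String[] args) {
        Properties props = build("localhost:9092"); // placeholder address
        System.out.println(props.getProperty("acks")); // all
        // With kafka-clients available, usage would look roughly like:
        // try (KafkaProducer<String, String> producer = new KafkaProducer<>(props)) {
        //     producer.send(new ProducerRecord<>("my-topic", "key-1", "hello"));
        // }
    }
}
```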

The central part of the KafkaProducer API is the KafkaProducer class, which takes the connection settings for the Kafka brokers in its constructor. In the following snippet, a producer is created from a configuration object and then used to send an event:

```java
// KafkaSimpleProducerConfig and Event are application-specific types, not Kafka client classes.
KafkaSimpleProducerConfig config = new KafkaSimpleProducerConfig(kafkaURI);
kafkaProducer = new KafkaProducer<>(config);
getProducerInstance().send(event, new Event<>(String.valueOf(partitionKey), message, timestamp, header));
```
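The `partitionKey` in the snippet above presumably ends up as the record key. In Kafka, when a record has a key and no explicit partition, the default partitioner chooses the partition by hashing the serialized key. Records with the same key therefore land in the same partition and are read in order. The sketch below illustrates that property only. It is a simplified stand-in: Kafka hashes with murmur2, whereas this uses `Arrays.hashCode`, so the partition numbers will not match a real cluster. `ToyKeyPartitioner` and `customer-42` are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Simplified key-based partitioning (NOT Kafka's partitioner, which uses murmur2).
// Shared property: equal keys map to the same partition while the
// partition count stays the same.
class ToyKeyPartitioner {
    static int partitionFor(byte[] keyBytes, int numPartitions) {
        // Clear the sign bit so the modulus is never negative.
        return (Arrays.hashCode(keyBytes) & 0x7fffffff) % numPartitions;
    }

    public static void main(String[] args) {
        // Two distinct arrays with identical contents, as two sends of one key would produce.
        byte[] first = "customer-42".getBytes(StandardCharsets.UTF_8);
        byte[] second = "customer-42".getBytes(StandardCharsets.UTF_8);
        System.out.println(partitionFor(first, 6) == partitionFor(second, 6)); // true
    }
}
```

Adding partitions to a topic changes the modulus, so a key may map to a different partition afterwards. Keyed topics are therefore usually sized up front.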

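Useful methods of the Kafka Producer API:

- flush(): Blocks until all previously sent records have completed, that is, until they have been acknowledged by the broker or have failed.

- metrics(): Returns a map of the internal metrics maintained by the producer. (Partition metadata for a topic comes from partitionsFor(topic) instead.)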
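The flush() contract is easier to see in a toy model. The sketch below is not the Kafka client. `ToyBufferedProducer` is a hypothetical class in which `send()` only queues a record and returns a `CompletableFuture`, and `flush()` delivers everything queued and completes those futures. In the real KafkaProducer, `send()` is likewise asynchronous, but a background I/O thread transmits the batches and `flush()` waits for that thread rather than doing the delivery itself.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;

// Toy model of producer buffering (NOT the Kafka client).
class ToyBufferedProducer {
    private final List<String> delivered = new ArrayList<>();
    private final List<String> pending = new ArrayList<>();
    private final List<CompletableFuture<Long>> pendingFutures = new ArrayList<>();

    // Queue a record; the future yields its offset once it has been delivered.
    synchronized CompletableFuture<Long> send(String value) {
        CompletableFuture<Long> future = new CompletableFuture<>();
        pending.add(value);
        pendingFutures.add(future);
        return future;
    }

    // Deliver every queued record and complete its future with an offset.
    synchronized void flush() {
        for (int i = 0; i < pending.size(); i++) {
            delivered.add(pending.get(i));
            pendingFutures.get(i).complete((long) (delivered.size() - 1));
        }
        pending.clear();
        pendingFutures.clear();
    }

    synchronized int deliveredCount() {
        return delivered.size();
    }

    public static void main(String[] args) {
        ToyBufferedProducer producer = new ToyBufferedProducer();
        CompletableFuture<Long> first = producer.send("event-1");
        producer.send("event-2");
        System.out.println(first.isDone());            // false: only queued so far
        producer.flush();
        System.out.println(first.join());              // 0
        System.out.println(producer.deliveredCount()); // 2
    }
}
```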

Kafka Consumer:

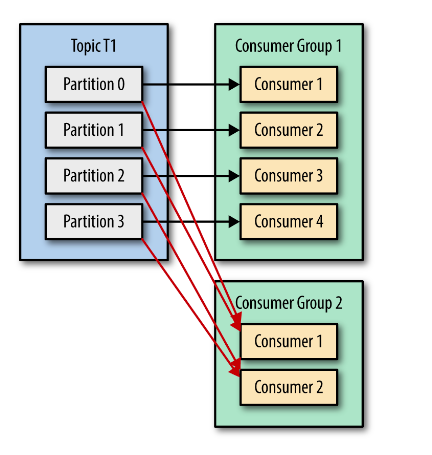

Kafka consumers are the client applications responsible for reading records from one or more topics, and from one or more partitions of each topic. A consumer can either subscribe to topics and let Kafka assign partitions to it automatically as a member of a consumer group, or be assigned specific partitions manually. Typically, this means writing a program using the KafkaConsumer API.
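Automatic assignment means the group's members divide a topic's partitions among themselves so that each partition is read by exactly one member. The sketch below shows a simplified round-robin division, similar in spirit to Kafka's RoundRobinAssignor. It is not Kafka's implementation: Kafka also ships range and sticky strategies, and manual assignment skips this step entirely. `ToyGroupAssignor` and the member names are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Simplified round-robin partition assignment within a consumer group
// (NOT Kafka's assignor code).
class ToyGroupAssignor {
    static Map<String, List<Integer>> assign(List<String> members, int numPartitions) {
        Map<String, List<Integer>> result = new LinkedHashMap<>();
        for (String member : members) {
            result.put(member, new ArrayList<>());
        }
        if (members.isEmpty()) {
            return result; // no one to assign partitions to
        }
        // Deal partitions out in turn, like cards, so each has exactly one owner.
        for (int p = 0; p < numPartitions; p++) {
            String owner = members.get(p % members.size());
            result.get(owner).add(p);
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(assign(List.of("consumer-a", "consumer-b"), 5));
        // {consumer-a=[0, 2, 4], consumer-b=[1, 3]}
    }
}
```

If a group has more members than partitions, the extra members receive no partitions and sit idle.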

The KafkaConsumer class has two generic type parameters: the key type and the value type. Just as producers can send data (the values) with keys, the consumer reads each record's key along with its value. In this example, both the keys and the values are byte arrays (byte[]).

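```java
// Consumer and KafkaConsumer here appear to be application-specific wrappers:
// the Apache client's KafkaConsumer has no start() method or event-handler argument.
Consumer<byte[], byte[]> rewardGatewayConsumer = new KafkaConsumer<byte[], byte[]>(
        getConfig(applicationProperties.getAfsKafkaConfig().getRewardGatewayTokenEvent()),
        rewardGatewayConsumerEventHandler);
rewardGatewayConsumer.start();
```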
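For comparison, a consumer built directly on the standard Apache Kafka Java client, with the same `byte[]` key and value types, is configured roughly as follows. This is a sketch assuming that client. `bootstrap.servers`, `group.id`, `key.deserializer`, `value.deserializer` and `auto.offset.reset` are real consumer settings, while `ConsumerSettings`, the `reward-gateway` group id, `my-topic` and `handle()` are hypothetical. The keys are plain strings so the sketch compiles without `kafka-clients`.

```java
import java.util.Properties;

// Builds a configuration for org.apache.kafka.clients.consumer.KafkaConsumer
// with byte[] keys and values. ConsumerSettings is a hypothetical helper.
class ConsumerSettings {
    static Properties build(String bootstrapServers, String groupId) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        // Consumers sharing a group.id split the topic's partitions between them.
        props.put("group.id", groupId);
        props.put("key.deserializer",
                "org.apache.kafka.common.serialization.ByteArrayDeserializer");
        props.put("value.deserializer",
                "org.apache.kafka.common.serialization.ByteArrayDeserializer");
        // Where to start reading when the group has no committed offset yet.
        props.put("auto.offset.reset", "earliest");
        return props;
    }

    public static void main(String[] args) {
        Properties props = build("localhost:9092", "reward-gateway"); // placeholders
        System.out.println(props.getProperty("group.id")); // reward-gateway
        // With kafka-clients available, a poll loop would look roughly like:
        // try (KafkaConsumer<byte[], byte[]> consumer = new KafkaConsumer<>(props)) {
        //     consumer.subscribe(List.of("my-topic"));
        //     while (true) {
        //         for (ConsumerRecord<byte[], byte[]> r : consumer.poll(Duration.ofMillis(500))) {
        //             handle(r.key(), r.value()); // handle() is a hypothetical callback
        //         }
        //     }
        // }
    }
}
```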