Merging CSV Files Using Cloud Services

Merging CSV Files Using Cloud Services

We are using the cloud storage bucket to store the data and BigQuery to upload the data from the Storage bucket and merge the files.

BigQuery

BigQuery is a low cost analytics data warehouse. Big Query does not manage the infrastructure and it doesn’t need a database administrator which benefits us to focus on analyzing data and use SQL.

The important properties of BigQuery are:

- To load and export the data

- To query and view the data

- To manage the data

Cloud Storage

Cloud storage is an online data storage where you will create a bucket with the unique name and store the data in it. Storage bucket can be located in the Regional or Multi-Regional as per the requirement. It’s a great advantage to the companies who use Cloud storage as they can pay for what they use depending on the size of storage.

We create a bucket and upload the file into the bucket. We will upload the data from the GCS storage bucket into the BigQuery and then query the data to merge the files.

Limitations to load the file in the BigQuery:

Files such as Wildcards and CSV lists are not supported in the BigQuery while uploading the data from the local machine. Though if you try to upload the file which is not supported then, it throws error.

For the compatible file format of the Big Query, the size of the file should be equal to the 10 MB or less than it and it should contain less than 16,000 rows.

Limitations while loading CSV data from Cloud Storage into BigQuery:

- The CSV files do not support nested or recurring data.

- Loading uncompressed data is easier than loading the compressed data.

- CSV or JSON file values must use the dash (-) separator in DATE columns and the date must be in the yyyy-mm-dd (year-month-day) format when you upload.

- Timestamp values must use a dash (-) separator for CSV or JSON data. The hh:mm:ss (hour-minute-second) portion of the timestamp should have a colon (:) separator.

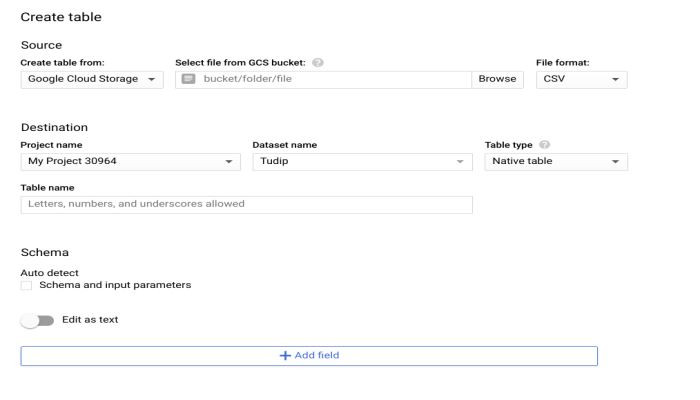

Loading CSV data into a BigQuery table

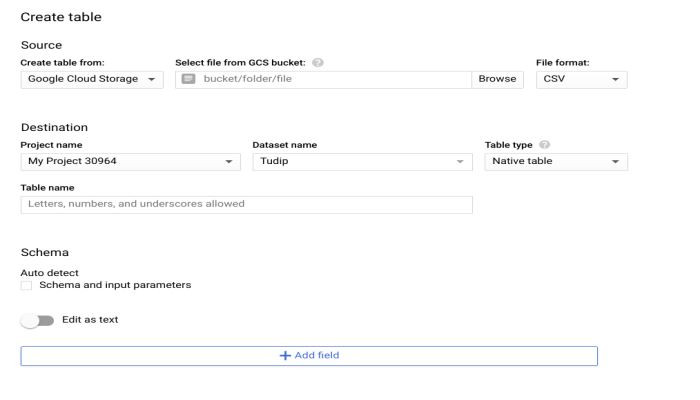

In the Big Query console, go to the dataset and create a new table. While creating the table, you need to provide the Source Table and Destination Table.

Source Table:

- For the source table, the location should be selected as Cloud Storage and enter the Cloud Storage URI in the source field.

- The location of the Cloud Storage bucket and the dataset that contains the tables should be in the same location.

- Select comma-separated values (CSV) as the file format.

Destination Table:

- Enter the table name and select the dataset name appropriately in the Big Query.

In the Schema section, you can either check mark the Schema definition as Automatically detect the schema or create the table by choosing “Edit as Text” to manually enter the data type.

Please refer to the diagram.

After creating the two different tables with the same CSV format, you can query it and merge the tables.

We run the simple query to merge the files using the UNION ALL command and make sure to give the limit to get the exact data.

The final output of the merged table is the combination of both the tables with complete data.

You can store the final data as CSV Google Drive, JSON Google Drive, CSV Local File, BigQuery Table or Google Sheets.