How to Protect Data in BigQuery

How to Protect Data in BigQuery

13 April 2022

You can protect your Bigquery data using Scan this data using Cloud DLP and then protect it using Cloud KMS keys.

1: Using Cloud DLP to scan BigQuery data

Knowing wherever your sensitive data exists is often the primary step in ensuring that it’s properly secured and managed. This information will help to reduce the risk of exposing sensitive details such as credit card numbers, medical information, Social Security numbers, driver’s license numbers, addresses, full names, and company-specific secrets. Periodic scanning of your data can also help you with compliance requirements and ensure best practices are followed as your data grows and changes with your use. To help meet compliance requirements, use Cloud Data Loss Prevention (Cloud DLP) to scan your BigQuery tables and to protect your sensitive data.

Cloud DLP is a fully-managed service allowing Google Cloud customers to identify and protect sensitive data at scale. Cloud DLP uses more than 100 predefined detectors to identify patterns, formats, and checksums. Cloud DLP also provides a set of tools to de-identify your data including masking, tokenization, pseudonymization, date shifting, and more, all without replicating customer data.

Cloud DLP includes Over 120 built-in information type detectors and the ability to define custom infoType detectors using dictionaries, regular expressions, and contextual elements. De-identification techniques includes redaction, masking, format-preserving encryption, date-shifting, and more. The ability to detect sensitive data within streams of data, structured text, files in storage repositories such as Cloud Storage and BigQuery, and even within images. Analysis of structured data to help understand its risk of being re-identified, including computation of metrics like k-anonymity, l-diversity, and more.

Before you begin:

- Get aware of Cloud DLP pricing and how to keep Cloud DLP costs under control.

- Enable the Cloud DLP API.

- Ensure that the user creating your Cloud DLP jobs is granted an appropriate predefined Cloud DLP Cloud IAM role or sufficient permissions to run Cloud DLP jobs.

Note: When you enable the Cloud DLP API, a service account is created with a name similar to service-<project_number>@dlp-api.iam.gserviceaccount.com. This service account is granted the DLP API Service Agent role which allows the service account to authenticate with the BigQuery API. For more information, see Service account on the Cloud DLP IAM permissions page.

Scanning BigQuery data using the Cloud Console:

To scan BigQuery data, you create a Cloud DLP job that analyzes a table. You can scan a BigQuery table quickly by using the Scan with DLP option in the BigQuery Cloud Console.

- To scan a BigQuery table using Cloud DLP:

- Open the BigQuery web UI in the Cloud Console.

- In the Resources section, expand your project and dataset, and select the BigQuery table that you want to scan.

- Click Export > Scan with DLP (Beta). The Cloud DLP job creation page opens in a new tab.

- For Step 1: Choose input data, the values in the Name and Location sections are automatically generated. Also, the Sampling section is automatically configured to run a sample scan against your data. You can adjust the number of rows in the sample by choosing Percentage of rows for the Limit rows by field. You can also change the number of rows sampled by adjusting the value in the Maximum number of rows field.

- When you complete this step then click on the Continue button.

- (Optional) For Step 2: Configure detection, you can configure what types of data to look for, called infoTypes. You can select from the list of pre-defined infoTypes, or you can select a template if one exists.

- When you complete this optional step then click on the Continue button.

- (Optional) For Step 3: Add actions, enable Save to BigQuery to publish your Cloud DLP findings to a BigQuery table. If you don’t store findings, the completed job will only contain statistics about the number of findings and their infoTypes. Saving findings to BigQuery saves details about the precise location and confidence of each individual finding.

- (Optional) If you enabled Save to BigQuery, in the Save to BigQuery section:

- For Project ID enter the project ID where your results are stored.

- For Dataset ID enter the name of the dataset that stores your results.

- (Optional) For Table ID enter the name of the table that stores your results. If no table ID is specified, a default name is assigned to a new table similar to the following: dlp_googleapis_<date>_<random-number>. If you specify an existing table, findings are appended to it.

- Click on the Continue button.

- (Optional) For Step 4: Schedule, configure a time span or schedule by selecting either Specify time span or Create a trigger to run the job on a periodic schedule.

- Click on the Continue button.

- (Optional) On the Review page, examine the details of your job.

- Click on the Create button.

- After the Cloud DLP job completes, you are redirected to the job details page, and you’re notified via email. You can view the results of the scan on the job details page, or you can click the link to the Cloud DLP job details page in the job completion email.

- If you chose to publish Cloud DLP findings to BigQuery, on the Job details page, click View Findings in BigQuery to open the table in the BigQuery web UI. You can then query the table and analyze your findings.

2: Protecting data with Cloud KMS keys

Cloud Key Management Service allows you to create, import, and manage cryptographic keys and perform cryptographic operations in a single centralized cloud service. You can use these keys and perform these operations by using Cloud KMS directly, by using Cloud HSM or Cloud External Key Manager, or by using Customer-Managed Encryption Keys (CMEK) integrations within other Google Cloud services.

With Cloud KMS you are the ultimate custodian of your data, you can manage cryptographic keys in the cloud in the same ways you do on-premises, and you have a provable and monitorable root of trust over your data.

By default, BigQuery encrypts customer content stored at rest. BigQuery handles and manages this default encryption for you without any additional actions on your part. First, data in a BigQuery table is encrypted using a data encryption key. Then, those data encryption keys are encrypted with key encryption keys, which is known as envelope encryption. Key encryption keys do not directly encrypt your data but are used to encrypt the data encryption keys that Google uses to encrypt your data. For more information, see Key management.

Before you begin:

- Understand datasets, tables, and queries.

- Decide whether you are going to run BigQuery and Cloud KMS in the same Google Cloud project, or in different projects. For documentation example purposes, the following convention is used:

- project_id is the project ID of the project running BigQuery

- project_number is the project number of the project running BigQuery

- kms_project_id is the project ID of the project running Cloud KMS (even if this is the same project running BigQuery)

- BigQuery is automatically enabled in new projects. If you are using a pre-existing project to run BigQuery, enable the BigQuery API.

- For the Google Cloud project that runs Cloud KMS:

- Enable the Cloud KMS API.

- Create a key ring and a key as described in Creating key rings and keys. Create the key ring in a location that matches the location of your BigQuery dataset:

- Any multi-regional dataset should use a multi-regional key ring from a matching location. For examples, a dataset in region US should be protected with a key ring from region us, and a dataset in region EU should be protected with a key ring from region europe.

- Regional datasets should use a matching regional keys. For example, a dataset in region asia-northeast1 should be protected with a key ring from region asia-northeast1.

- The global region is not supported for use with BigQuery.

Grant encryption and decryption permission:

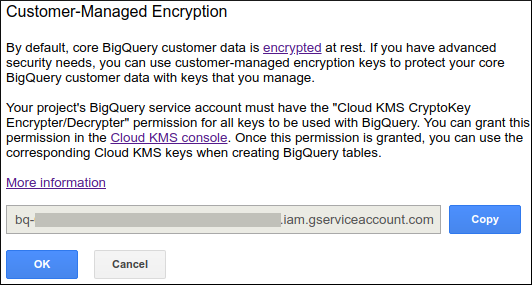

Use the Google Cloud Console to determine the BigQuery service account ID, and provide the service account with the appropriate role to encrypt and decrypt using Cloud KMS.

Determine the service account ID:

- Go to the BigQuery web UI.

- Click the down arrow icon down arrow icon next to your project name in the navigation and then click Customer-Managed Encryption.

- A user dialog opens to show you the service account that requires encryption and decryption permission:

- Click Copy to copy the service account ID to your clipboard and then click OK to close the user dialog.

Assign the Encrypter/Decrypter role:

- Open the Security page in the Cloud Console.

- Select your project and click Continue.

- Identify the encryption key to which you want to add the role.

- If the bq-<project_number>@bigquery-encryption.iam.gserviceaccount.com service account isn’t already on the members list, it doesn’t have any roles assigned to it. Click Add member and enter the email address of the service account, bq-<project_number>@bigquery-encryption.iam.gserviceaccount.com.

- If the service account is already on the members list, it has existing roles. Click the current role drop-down list for the bq-<project_number>@bigquery-encryption.iam.gserviceaccount.com service account.

- Click the drop-down list for Role, click Cloud KMS, and then click the Cloud KMS CryptoKey Encrypter/Decrypter role.

- Click Add or Save to apply the role to the bq-<project_number>@bigquery-encryption.iam.gserviceaccount.com service account.

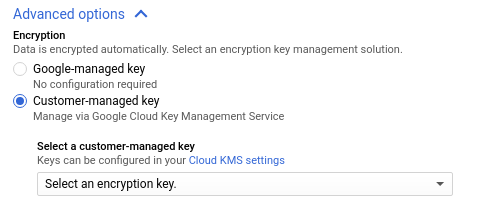

Create an empty table protected by Cloud KMS:

- Click the down arrow icon down arrow icon next to your dataset name in the BigQuery web user interface and then click Create new table.

- On the Create table page, fill in the information needed to create an empty table with a schema definition. Before you click Create Table, set the encryption type and specify the Cloud KMS key to use with the table:

- Click on the drop-down list for Encryption Type and select Customer-Managed Encryption.

- For Customer-Managed Encryption Key, enter the resource ID for the key.

- Click Create Table.

Protect query results with Cloud KMS key:

- Click the Compose query button in the BigQuery web UI.

- Enter a valid BigQuery SQL query in the New Query text area.

- Click Encryption Type and select Customer-Managed Encryption.

- For Customer-Managed Encryption Key, enter the resource ID for the key.

- Click Run Query.

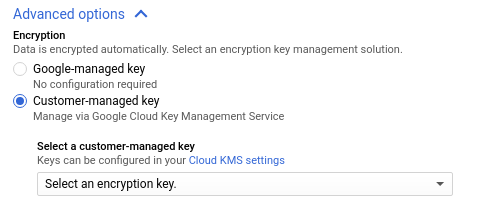

Load a table protected by Cloud KMS:

Protect a load job destination table with a customer-managed encryption key by specifying the key when you load the table.

- Open the BigQuery web UI in the Cloud Console.

- In the navigation panel, in the Resources section, expand your project and select a dataset.

- On the right side of the window, in the details panel, click Create table.

- Enter the options you want to use for loading the table, but before you click Create table, click Advanced options.

- Under Encryption, select Customer-managed key.

- Click the Select a customer-managed key dropdown and select the key to use.

If you don’t see any keys available, enter a key resource ID. - Click Create table.

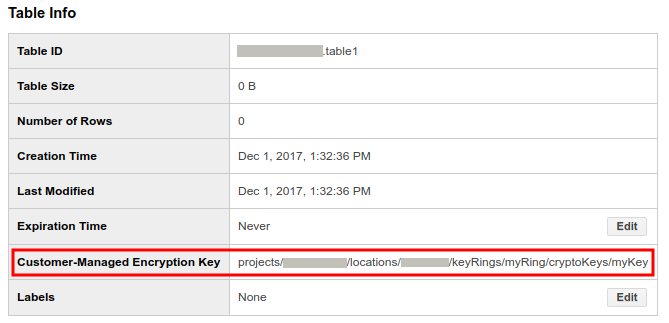

Determine if a table is protected by Cloud KMS:

- In the BigQuery web UI, click the blue arrow to the left of your dataset to expand it, or double-click the dataset name. This displays the tables and views in the dataset.

- Click the table name.

- Click Details. The Table Details page displays the table’s description and table information.

- If the table is protected by Cloud KMS, the Customer-Managed Encryption Key field will display the key resource ID.

Change the Cloud KMS key for a BigQuery table:

To change the Cloud KMS key of an existing CMEK-protected table, you can run an ALTER TABLE query in BigQuery, use the API, or use the bq command-line tool.

There are two ways to modify the Cloud KMS key using the API and command-line tool: update or cp. If you use update, you can change the Cloud KMS key used for a KMS-protected table. If you use cp, you can change the Cloud KMS key used for a CMEK-protected table, change a table from default encryption to CMEK-protection, also change a table from CMEK-protection to default encryption. An advantage of update is it is faster than cp and it allows the use of table decorators.

- Go to the BigQuery web UI.

- Click Compose query.

- Type your DDL statement into the New Query text area. For the kms_key_name value, specify the resource ID of the key that you want to use to protect the table.

<code> #standardSQL ALTER TABLE mydataset.mytable SET OPTIONS ( kms_key_name="projects/[PROJECT_ID]/locations/[LOCATION]/keyRings/[KEYRING]/cryptoKeys/[KEY]" ) </code>