Data processing on Google Cloud Platform

The data processing options available on GCP are part of its broader data analytics platform.

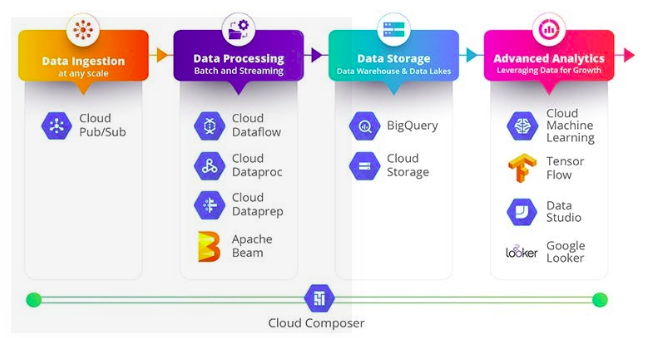

Here are the high-level components of the pipeline:

Agile Data Options in GCP

The first step is to bring data into the platform from various disparate sources, a step generally referred to as data ingestion. Once the data is in the pipeline, it is processed into a form suitable for analysis. The processed data is then stored in a modern data warehouse or data lake, where enterprise users consume it for operational and analytical reporting, machine learning, and advanced analytics or AI use cases.
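The four stages described above can be sketched end to end in plain Python. This is a toy model under stated assumptions: the function names (`ingest`, `process`, `store`, `consume`), the `name, amount` record format, and the in-memory list standing in for a warehouse are all hypothetical and do not correspond to any GCP API.

```python
from typing import Dict, Iterable, List

# Hypothetical stages: ingest -> process -> store -> consume (not a GCP API).

def ingest(sources: Dict[str, List[str]]) -> List[dict]:
    """Collect raw rows from several disparate sources, tagging each with its origin."""
    records = []
    for source_name, rows in sources.items():
        for row in rows:
            records.append({"source": source_name, "raw": row})
    return records

def process(records: Iterable[dict]) -> List[dict]:
    """Turn raw 'name, amount' strings into typed rows, dropping malformed input."""
    clean = []
    for rec in records:
        parts = rec["raw"].split(",")
        if len(parts) != 2:
            continue  # wrong number of fields: drop the row
        name, amount = parts[0].strip(), parts[1].strip()
        try:
            clean.append({"source": rec["source"], "name": name, "amount": float(amount)})
        except ValueError:
            continue  # amount is not numeric: drop the row
    return clean

def store(table: List[dict], rows: List[dict]) -> None:
    """Append processed rows to an in-memory stand-in for a warehouse table."""
    table.extend(rows)

def consume(table: List[dict]) -> Dict[str, float]:
    """A simple reporting query: total amount per source."""
    totals: Dict[str, float] = {}
    for row in table:
        totals[row["source"]] = totals.get(row["source"], 0.0) + row["amount"]
    return totals

warehouse: List[dict] = []
sources = {
    "crm": ["alice, 10.5", "bob, 4"],
    "web": ["carol, 2.5", "not-a-valid-row"],
}
store(warehouse, process(ingest(sources)))
print(consume(warehouse))  # {'crm': 14.5, 'web': 2.5}
```

Each stage only depends on the output of the previous one, which is what lets the real services behind each stage be chosen and scaled independently.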

Data Ingestion

Cloud Pub/Sub:

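To improve reliability, data ingestion and data movement follow the publish/subscribe (Pub/Sub) pattern, which decouples the systems producing data from the systems consuming it: publishers send messages to a topic, and each subscription attached to that topic receives its own copy. Cloud Pub/Sub can scale up to 100 GB/sec with consistent performance, which is enough to satisfy the scale of almost any enterprise. Unacknowledged messages are retained for 7 days by default, and the retention period is configurable. The service is deeply integrated with the other components of the GCP analytics platform.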
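To make the publish/subscribe pattern concrete, here is a minimal in-memory model of a topic with several subscriptions. `ToyTopic` is a hypothetical class, not the `google-cloud-pubsub` client library: it shows fan-out (every subscription gets its own copy of each message), acknowledgement, and a retention window defaulting to 7 days, and it deliberately omits ack deadlines, redelivery, ordering, and persistence.

```python
import time
from collections import deque
from typing import Deque, Dict, List, Optional, Tuple

class ToyTopic:
    """Hypothetical in-memory stand-in for a Pub/Sub topic and its subscriptions."""

    def __init__(self, retention_seconds: float = 7 * 24 * 3600) -> None:
        self.retention_seconds = retention_seconds  # 7-day default, as described above
        # Each subscription holds (publish_time, message_id, data) tuples.
        self.subscriptions: Dict[str, Deque[Tuple[float, int, bytes]]] = {}
        self._next_id = 0

    def subscribe(self, name: str) -> None:
        # A new subscription only sees messages published after it exists.
        self.subscriptions[name] = deque()

    def publish(self, data: bytes, now: Optional[float] = None) -> int:
        now = time.time() if now is None else now
        msg_id = self._next_id
        self._next_id += 1
        for queue in self.subscriptions.values():
            queue.append((now, msg_id, data))  # fan-out: one copy per subscription
        return msg_id

    def pull(self, name: str, max_messages: int,
             now: Optional[float] = None) -> List[Tuple[int, bytes]]:
        now = time.time() if now is None else now
        queue = self.subscriptions[name]
        # Expire messages that have outlived the retention window.
        while queue and now - queue[0][0] > self.retention_seconds:
            queue.popleft()
        # Pulling does not remove messages; only acknowledging does.
        return [(msg_id, data) for _, msg_id, data in list(queue)[:max_messages]]

    def ack(self, name: str, msg_ids: List[int]) -> None:
        acked = set(msg_ids)
        queue = self.subscriptions[name]
        self.subscriptions[name] = deque(m for m in queue if m[1] not in acked)

topic = ToyTopic()
topic.subscribe("analytics")
topic.subscribe("archive")
topic.publish(b"order-1", now=0.0)
topic.publish(b"order-2", now=1.0)

batch = topic.pull("analytics", max_messages=10, now=2.0)
topic.ack("analytics", [msg_id for msg_id, _ in batch])
print(topic.pull("analytics", 10, now=3.0))  # []
print(topic.pull("archive", 10, now=3.0))    # [(0, b'order-1'), (1, b'order-2')]
# Eight days later, the unacknowledged copies in "archive" have expired.
print(topic.pull("archive", 10, now=8 * 24 * 3600))  # []
```

Because each subscription keeps its own queue, a slow consumer such as the archive does not hold back a fast one such as analytics.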

Data Processing

Cloud Dataflow:

Cloud Dataflow is used for both batch and real-time streaming data processing. The traditional approach to building data pipelines was to maintain separate codebases (as in Lambda architecture patterns) for batch, micro-batch, and stream processing. Cloud Dataflow instead provides a unified programming model, based on Apache Beam, so that users can process both kinds of workload with the same codebase. This also simplifies operations and management.
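The unified-model idea can be illustrated without any GCP dependency: one piece of business logic (`count_words`, a hypothetical example) is reused unchanged by a batch runner and by a streaming runner that groups events into fixed time windows. This is a plain-Python sketch of the concept, not the Apache Beam SDK; in Beam the same role is played by a transform applied to either a bounded or an unbounded `PCollection`.

```python
from collections import Counter
from typing import Dict, Iterable, Iterator, List, Optional, Tuple

def count_words(lines: Iterable[str]) -> Dict[str, int]:
    """The single piece of business logic shared by both execution modes."""
    counts: Counter = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return dict(counts)

def run_batch(lines: List[str]) -> Dict[str, int]:
    """Bounded input: apply the logic once to the whole dataset."""
    return count_words(lines)

def run_streaming(events: Iterable[Tuple[float, str]],
                  window_seconds: float) -> Iterator[Tuple[float, Dict[str, int]]]:
    """Unbounded input: group (timestamp, line) events into fixed windows and
    apply the same logic to each window as it closes.

    Assumes events arrive in timestamp order; real stream processors must also
    handle late and out-of-order data.
    """
    current_start: Optional[float] = None
    buffer: List[str] = []
    for ts, line in events:
        start = ts - (ts % window_seconds)  # start of the window this event falls in
        if current_start is not None and start != current_start:
            yield current_start, count_words(buffer)  # previous window is complete
            buffer = []
        current_start = start
        buffer.append(line)
    if buffer:
        yield current_start, count_words(buffer)

print(run_batch(["a b", "b c"]))  # {'a': 1, 'b': 2, 'c': 1}
stream = [(0.0, "a b"), (30.0, "b"), (65.0, "c")]
print(list(run_streaming(stream, window_seconds=60)))
# [(0.0, {'a': 1, 'b': 2}), (60.0, {'c': 1})]
```

Only the runner differs between the two modes; the logic inside `count_words` is written once, which is the maintenance burden a Lambda-style architecture cannot avoid.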

Dataproc:

Dataproc is a fully managed Apache Hadoop and Spark service. It lets users work with familiar open-source tools such as Spark, Hadoop, Hive, Tez, Presto, and Jupyter, while integrating tightly with other services in the Google Cloud Platform ecosystem. It also gives users the flexibility to define clusters rapidly, including the machine types used for the master and worker (data) nodes.
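Defining a cluster, including the machine types for the master and worker nodes, amounts to filling in a small configuration structure. The sketch below builds a Python dict in the shape used by the Dataproc API's cluster configuration (`master_config` and `worker_config`, each with `num_instances` and `machine_type_uri`), as in Google's Python client quickstart. The helper `build_cluster_spec`, the project and cluster names, and the chosen machine types are illustrative assumptions; check the current Dataproc documentation before sending such a spec to the API.

```python
from typing import Any, Dict

def build_cluster_spec(project_id: str, cluster_name: str, master_type: str,
                       worker_type: str, num_workers: int) -> Dict[str, Any]:
    """Hypothetical helper: one master node plus a group of worker nodes,
    each group with its own machine type."""
    if num_workers < 1:
        raise ValueError("this sketch expects at least one worker node")
    return {
        "project_id": project_id,
        "cluster_name": cluster_name,
        "config": {
            "master_config": {"num_instances": 1, "machine_type_uri": master_type},
            "worker_config": {"num_instances": num_workers, "machine_type_uri": worker_type},
        },
    }

spec = build_cluster_spec("my-project", "analytics-cluster",
                          master_type="n1-standard-4", worker_type="n1-highmem-8",
                          num_workers=4)
print(spec["config"]["worker_config"])
# {'num_instances': 4, 'machine_type_uri': 'n1-highmem-8'}
```

Keeping master and worker settings in separate groups is what allows, for example, a modest master with memory-heavy workers.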

There are two main types of Dataproc clusters that can be provisioned in Google Cloud Platform. The first is the ephemeral cluster: the cluster is created when a job is submitted, scaled up or down as the job requires, and deleted once the job is completed. The second is the long-standing cluster, where the user creates a cluster (comparable to an on-premises cluster) with a defined minimum and maximum number of nodes. Jobs run within these constraints, and when they complete, the cluster scales down to the minimum. This gives the flexibility to choose the type of cluster based on the use case and the processing power needed.
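The two cluster lifecycles can be contrasted in a small simulation. Both helpers are hypothetical: `target_workers` models a long-standing cluster that sizes itself to pending work but stays within its configured minimum and maximum, and `ephemeral_cluster` models a cluster that exists only for the duration of one job. Neither is the Dataproc API; the real autoscaler uses its own metrics and policy settings.

```python
from contextlib import contextmanager
from typing import Iterator, List

def target_workers(pending_tasks: int, tasks_per_worker: int,
                   min_workers: int, max_workers: int) -> int:
    """Long-standing cluster: size to the workload, clamped to [min_workers, max_workers]."""
    needed = -(-pending_tasks // tasks_per_worker)  # ceiling division
    return max(min_workers, min(max_workers, needed))

events: List[str] = []  # records lifecycle actions for illustration

@contextmanager
def ephemeral_cluster(name: str) -> Iterator[str]:
    """Ephemeral cluster: created for one job, deleted afterwards even if the job fails."""
    events.append(f"create {name}")
    try:
        yield name
    finally:
        events.append(f"delete {name}")

print(target_workers(50, 4, min_workers=2, max_workers=10))  # 10 (capped at the maximum)
print(target_workers(12, 4, min_workers=2, max_workers=10))  # 3
print(target_workers(0, 4, min_workers=2, max_workers=10))   # 2 (idle: back to the minimum)

with ephemeral_cluster("nightly-etl") as cluster:
    events.append(f"run job on {cluster}")
print(events)  # ['create nightly-etl', 'run job on nightly-etl', 'delete nightly-etl']
```

The trade-off is visible in the sketch: the long-standing cluster never drops below its minimum, so it is ready for the next job but keeps paying for idle nodes, while the ephemeral cluster costs nothing between jobs but pays the creation time on every run.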

Dataproc is an enterprise-ready service with high availability and high scalability. It supports both horizontal scaling (to thousands of nodes per cluster) and vertical scaling (configurable compute machine types, GPUs, solid-state drive (SSD) storage, and persistent disks).